You may be wondering: what is the AWS SDK?

The AWS SDK for JavaScript enables you to directly access AWS services from JavaScript code running in the browser. Authenticate users through Facebook, Google, or Login with Amazon using web identity federation. Store application data in Amazon DynamoDB, and save user files to Amazon S3.

- Direct calls to AWS services mean no server-side code (Apex) is needed to consume AWS APIs.

- Using nothing but common web standards - HTML, CSS, and JavaScript - you can build full-featured dynamic browser applications.

- You don't need to implement the Signature Version 4 signing process yourself, because the SDK signs every request internally.

Prerequisites: you should have

- An AWS account

- A configured S3 bucket

- An AWS Access Key Id

- An AWS Secret Access Key

It's demo time:

To keep this demo simple, I have created a file upload component that uploads the selected file to an AWS S3 bucket when a button is clicked.

All AWS-related configuration is kept in a custom metadata type called AWS_Setting__mdt.


```html
<!-- fileupload_aws_s3bucket.html -->
<template>
    <lightning-card variant="narrow" title="AWS S3 File Uploader" style="width:30rem" icon-name="action:upload">
        <div class="slds-m-top_medium slds-m-bottom_x-large">
            <!-- Single file -->
            <div class="slds-p-around_medium lgc-bg">
                <lightning-input type="file" label="Select a file" onchange={handleSelectedFiles}></lightning-input>
                {fileName}
            </div>
            <div class="slds-p-around_medium lgc-bg">
                <lightning-button class="slds-m-top_medium" label="Upload to AWS S3 bucket" onclick={uploadToAWS}
                    variant="brand">
                </lightning-button>
            </div>
        </div>
        <template if:true={showSpinner}>
            <lightning-spinner alternative-text="Uploading" size="medium">
            </lightning-spinner>
        </template>
    </lightning-card>
</template>
```

fileupload_aws_s3bucket.js: the code is fairly self-explanatory, but I'll walk through it.

First, we need to add the AWS SDK JS file as a Salesforce static resource. Here is the link to the AWS SDK JS file.

To use a JavaScript library from a third-party site, add it to a static resource and then reference that static resource from the component. In the component, import loadScript from lightning/platformResourceLoader and call it in the renderedCallback lifecycle hook. Once the library has loaded from the static resource, we can use it as normal.
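renderedCallback runs after every render, so the script load should be requested only once. Below is a minimal sketch of that guard in plain JavaScript so it can run outside Salesforce. `ScriptLoaderGuard` is a hypothetical stand-in for the component, and its `loadFn` plays the role of `() => loadScript(this, AWS_SDK)`.

```javascript
// Hypothetical stand-in for the LWC component. loadFn plays the role of
// () => loadScript(this, AWS_SDK), which returns a Promise in LWC.
class ScriptLoaderGuard {
  constructor(loadFn) {
    this.loadFn = loadFn;
    this.loadRequested = false; // set synchronously, before the Promise settles
    this.loaded = false;
  }

  // Called after every render; only the first call triggers a load.
  renderedCallback() {
    if (this.loadRequested) {
      return;
    }
    this.loadRequested = true;
    this.loadFn()
      .then(() => {
        this.loaded = true; // from here on, window.AWS would be available
      })
      .catch((error) => {
        console.error("error -> " + error);
      });
  }
}
```

The flag is set before the Promise settles. Otherwise, renders that happen while the script is still downloading would call the loader again.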

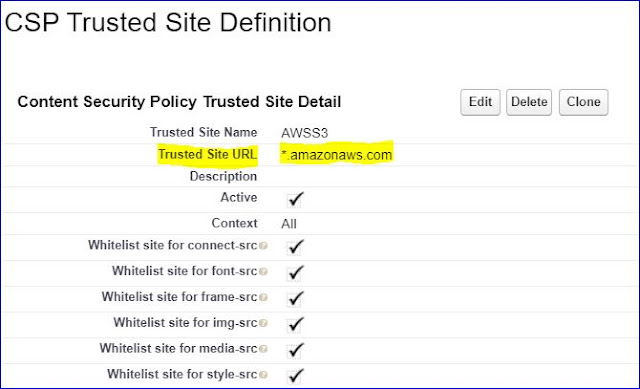

By default, we can’t make WebSocket connections or calls to third-party APIs from JavaScript code. To allow them, add the remote site as a CSP Trusted Site. In this case, I added *.amazonaws.com as a CSP Trusted Site. Special thanks to Mohith (@msrivastav13), who helped me figure out which domain to add.

The Lightning Component framework uses Content Security Policy (CSP), which is a W3C standard, to control the source of content that can be loaded on a page. The default CSP policy doesn’t allow API calls from JavaScript code. We change the policy, and the content of the CSP header, by adding CSP Trusted Sites.
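Why does a single wildcard entry cover the SDK's requests? The sketch below is a simplified model of how a CSP host-source wildcard such as `*.amazonaws.com` is matched against a request's host. It ignores scheme, port and path. The function name and the example bucket hostname are hypothetical.

```javascript
// Simplified CSP host matching (host part only; scheme, port and path ignored).
// A leading "*" matches one or more subdomain labels, but never the bare domain.
function hostMatchesCspPattern(host, pattern) {
  const h = host.toLowerCase();
  const p = pattern.toLowerCase();
  if (p.startsWith("*")) {
    // "*.amazonaws.com" -> the host must end with ".amazonaws.com"
    return h.endsWith(p.slice(1));
  }
  return h === p;
}

// A virtual-hosted-style S3 endpoint (the bucket name is made up).
console.log(hostMatchesCspPattern("my-demo-bucket.s3.us-east-1.amazonaws.com", "*.amazonaws.com")); // true
console.log(hostMatchesCspPattern("amazonaws.com", "*.amazonaws.com")); // false
```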

Once the SDK has loaded, I fetch the AWS-related configuration from AWS_Setting__mdt by assigning the metadata record Id to awsSettngRecordId. The @wire configuration references it as the reactive parameter $awsSettngRecordId. This assignment therefore invokes the @wire service, which provisions the data.

When the @wire service provisions the data, the initializeAwsSdk method is called to initialize the AWS SDK with the received configuration.

Now that the SDK is initialized, we are ready to upload documents. The button's click handler, uploadToAWS, calls the SDK's putObject method to upload the selected file to the S3 bucket. On success, it lists the bucket's objects with listObjects. Please refer to the AWS.S3 documentation for more information on the methods the SDK supports.
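The `data` that getRecord provisions holds each field as `{ value, displayValue }` under `data.fields`. The sketch below shows that shape and the conversion into the config object the component uses. The helper names (`AWS_SETTING_FIELDS`, `WIRE_FIELDS`, `toAwsS3Config`) and the missing-field check are hypothetical additions. The field API names come from the component.

```javascript
// Field API names on AWS_Setting__mdt, as used in the component's @wire config.
const AWS_SETTING_FIELDS = [
  "S3_Bucket_Name__c",
  "AWS_Access_Key_Id__c",
  "AWS_Secret_Access_Key__c",
  "S3_Region_Name__c"
];

// Object-qualified names, in the form passed to getRecord's `fields` parameter.
const WIRE_FIELDS = AWS_SETTING_FIELDS.map((name) => "AWS_Setting__mdt." + name);

// Hypothetical helper: converts getRecord's data.fields into the component's
// config object, failing fast if any setting is missing or blank.
function toAwsS3Config(data) {
  const fields = data.fields;
  for (const name of AWS_SETTING_FIELDS) {
    if (!fields[name] || !fields[name].value) {
      throw new Error("Missing AWS setting: " + name);
    }
  }
  return {
    s3bucketName: fields.S3_Bucket_Name__c.value,
    awsAccessKeyId: fields.AWS_Access_Key_Id__c.value,
    awsSecretAccessKey: fields.AWS_Secret_Access_Key__c.value,
    s3RegionName: fields.S3_Region_Name__c.value
  };
}
```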
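Initialization comes down to two calls on the SDK v2 namespace. `AWS.config.update` sets the credentials and region, and `new AWS.S3` creates a client with a default `Bucket` bound through `params`. The sketch below assumes a hypothetical `createS3Client` function that takes the namespace (`window.AWS` in the component) as an argument. That way it can be exercised with a stub instead of the real SDK. Unlike the component, it passes the region inside `config.update` rather than assigning `AWS.config.region` separately.

```javascript
// Sketch: AWS is the SDK v2 namespace (window.AWS once the static resource has loaded).
function createS3Client(AWS, confData) {
  // Global SDK configuration: credentials and the bucket's region.
  AWS.config.update({
    accessKeyId: confData.awsAccessKeyId,
    secretAccessKey: confData.awsSecretAccessKey,
    region: confData.s3RegionName
  });
  // params are bound to every request this client sends, so later calls
  // such as putObject only need Key, Body, and so on.
  return new AWS.S3({
    apiVersion: "2006-03-01",
    params: { Bucket: confData.s3bucketName }
  });
}
```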
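The upload itself is a single putObject call. The object key is derived from the file name by replacing each run of whitespace with `_` and lowercasing the result. The sketch below builds the request parameters from a File-like object with `name` and `type`. The helper names are hypothetical. `acl` is optional because buckets with ACLs disabled may reject a `public-read` ACL.

```javascript
// Mirrors the component's key logic: each run of whitespace becomes "_", then lowercase.
function toObjectKey(fileName) {
  return fileName.replace(/\s+/g, "_").toLowerCase();
}

// Builds the putObject parameters. Bucket is omitted because the S3 client
// binds it through its default params. acl is optional (e.g. "public-read").
function buildPutObjectParams(file, acl) {
  const params = {
    Key: toObjectKey(file.name),
    ContentType: file.type,
    Body: file
  };
  if (acl) {
    params.ACL = acl;
  }
  return params;
}

console.log(toObjectKey("Quarterly Report  Q1.PDF")); // prints: quarterly_report_q1.pdf
```

Calling `buildPutObjectParams(this.selectedFilesToUpload, "public-read")` yields the same Key, ContentType, Body and ACL values that the component passes to putObject.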

Please note: with this approach, the Access Key Id and Secret Access Key are sent to the browser, where anyone using the component can see them.

```javascript
/* fileupload_aws_s3bucket.js */
/* eslint-disable no-console */
import { LightningElement, track, wire } from "lwc";
import { getRecord } from "lightning/uiRecordApi";
import { loadScript } from "lightning/platformResourceLoader";
import AWS_SDK from "@salesforce/resourceUrl/AWSSDK";

export default class Fileupload_aws_s3bucket extends LightningElement {
  /*========= Start - variable declaration =========*/
  s3; //store AWS S3 object
  isAwsSdkLoadRequested = false; //flag to make sure the SDK script is requested only once
  isAwsSdkInitialized = false; //flag to check if AWS SDK initialized
  @track awsSettngRecordId; //store record id of custom metadata type where AWS configurations are stored
  selectedFilesToUpload; //store selected file
  @track showSpinner = false; //used for when to show spinner
  @track fileName; //to display the selected file name
  /*========= End - variable declaration =========*/

  //Called after every render of the component. This lifecycle hook is specific to Lightning Web Components,
  //it isn’t from the HTML custom elements specification.
  renderedCallback() {
    //renderedCallback runs after every render, so request the SDK script only once
    if (this.isAwsSdkInitialized || this.isAwsSdkLoadRequested) {
      return;
    }
    this.isAwsSdkLoadRequested = true;
    Promise.all([loadScript(this, AWS_SDK)])
      .then(() => {
        //For demo, hard coded the Record Id. It can be passed dynamically depending on the use case
        this.awsSettngRecordId = "m012v000000FMQJ";
      })
      .catch(error => {
        console.error("error -> " + error);
      });
  }

  //Using wire service getting AWS configuration from Custom Metadata type based upon record id passed
  @wire(getRecord, {
    recordId: "$awsSettngRecordId",
    fields: [
      "AWS_Setting__mdt.S3_Bucket_Name__c",
      "AWS_Setting__mdt.AWS_Access_Key_Id__c",
      "AWS_Setting__mdt.AWS_Secret_Access_Key__c",
      "AWS_Setting__mdt.S3_Region_Name__c"
    ]
  })
  awsConfigData({ error, data }) {
    if (data) {
      const currentData = data.fields;
      const awsS3MetadataConf = {
        s3bucketName: currentData.S3_Bucket_Name__c.value,
        awsAccessKeyId: currentData.AWS_Access_Key_Id__c.value,
        awsSecretAccessKey: currentData.AWS_Secret_Access_Key__c.value,
        s3RegionName: currentData.S3_Region_Name__c.value
      };
      this.initializeAwsSdk(awsS3MetadataConf); //Initializing AWS SDK based upon configuration data
    } else if (error) {
      console.error("error ====> " + JSON.stringify(error));
    }
  }

  //Initializing AWS SDK
  initializeAwsSdk(confData) {
    const AWS = window.AWS;
    AWS.config.update({
      accessKeyId: confData.awsAccessKeyId, //Assigning access key id
      secretAccessKey: confData.awsSecretAccessKey //Assigning secret access key
    });
    AWS.config.region = confData.s3RegionName; //Assigning region of S3 bucket
    this.s3 = new AWS.S3({
      apiVersion: "2006-03-01",
      params: {
        Bucket: confData.s3bucketName //Assigning S3 bucket name
      }
    });
    this.isAwsSdkInitialized = true;
  }

  //get the file name from user's selection
  handleSelectedFiles(event) {
    if (event.target.files.length > 0) {
      this.selectedFilesToUpload = event.target.files[0];
      this.fileName = event.target.files[0].name;
      console.log("fileName ====> " + this.fileName);
    }
  }

  //file upload to AWS S3 bucket
  uploadToAWS() {
    if (!this.s3) {
      console.error("AWS SDK is not initialized yet");
      return;
    }
    if (this.selectedFilesToUpload) {
      this.showSpinner = true;
      const objKey = this.selectedFilesToUpload.name
        .replace(/\s+/g, "_") //each run of whitespace characters is replaced with _
        .toLowerCase();
      //starting file upload
      this.s3.putObject(
        {
          Key: objKey,
          ContentType: this.selectedFilesToUpload.type,
          Body: this.selectedFilesToUpload,
          //Note: buckets with ACLs disabled (Object Ownership "Bucket owner enforced") may reject this ACL
          ACL: "public-read"
        },
        err => {
          this.showSpinner = false; //hide the spinner on success and on failure
          if (err) {
            console.error(err);
          } else {
            console.log("Success");
            this.listS3Objects();
          }
        }
      );
    }
  }

  //listing all stored documents from S3 bucket
  listS3Objects() {
    this.s3.listObjects((err, data) => {
      if (err) {
        console.log("Error", err);
      } else {
        console.log("Success", data);
      }
    });
  }
}
```
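The putObject callback has to reset the spinner on both the success and the error path. As an alternative sketch, the callback-style call can be wrapped in a Promise so the reset happens once in `finally`. The SDK v2 also offers `s3.putObject(params).promise()` for the same purpose. The `putObjectAsync` and `uploadWithSpinner` helpers are hypothetical, and `component` stands in for the LWC instance.

```javascript
// Wraps a callback-style putObject(params, (err, data) => ...) in a Promise.
function putObjectAsync(s3, params) {
  return new Promise((resolve, reject) => {
    s3.putObject(params, (err, data) => {
      if (err) {
        reject(err);
      } else {
        resolve(data);
      }
    });
  });
}

// Hypothetical upload flow: resolves to true on success, false on failure.
async function uploadWithSpinner(component, params) {
  component.showSpinner = true;
  try {
    await putObjectAsync(component.s3, params);
    return true;
  } catch (err) {
    console.error(err);
    return false;
  } finally {
    component.showSpinner = false; // reset on success and on failure
  }
}
```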